Using Data

Data is an integrated data analytics platform that covers the full workflow of data integration, modeling, analysis, chart generation, and publishing. Its functional modules let users build business relationship models from raw data, generate charts from natural-language requests, obtain AI-generated insights, and publish and share reports.

The Analytics module includes several submodules: Smart BI, My Reports, Report Center, Data Sources, Data Assets, and Business Domains.

The Analytics workflow is divided into the following stages:

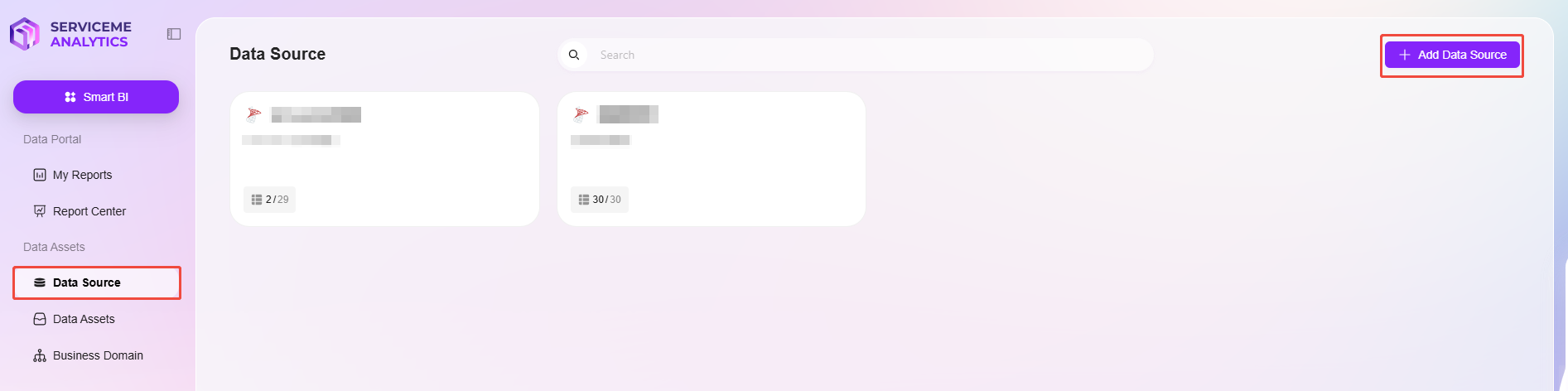

Data Sources

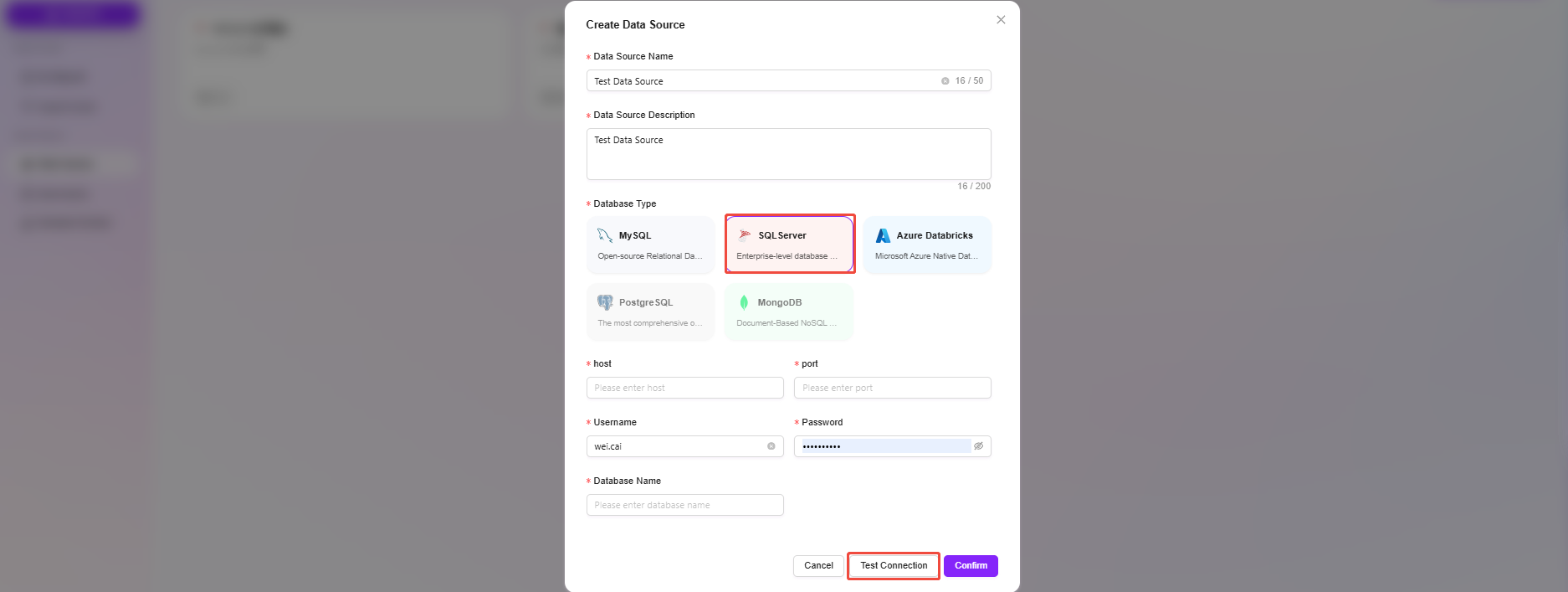

Connecting Data Sources

The first step in Analytics is connecting data sources to provide foundational data for subsequent analysis.

- Supports mainstream data platforms, including MySQL, SQL Server, and Azure Databricks;

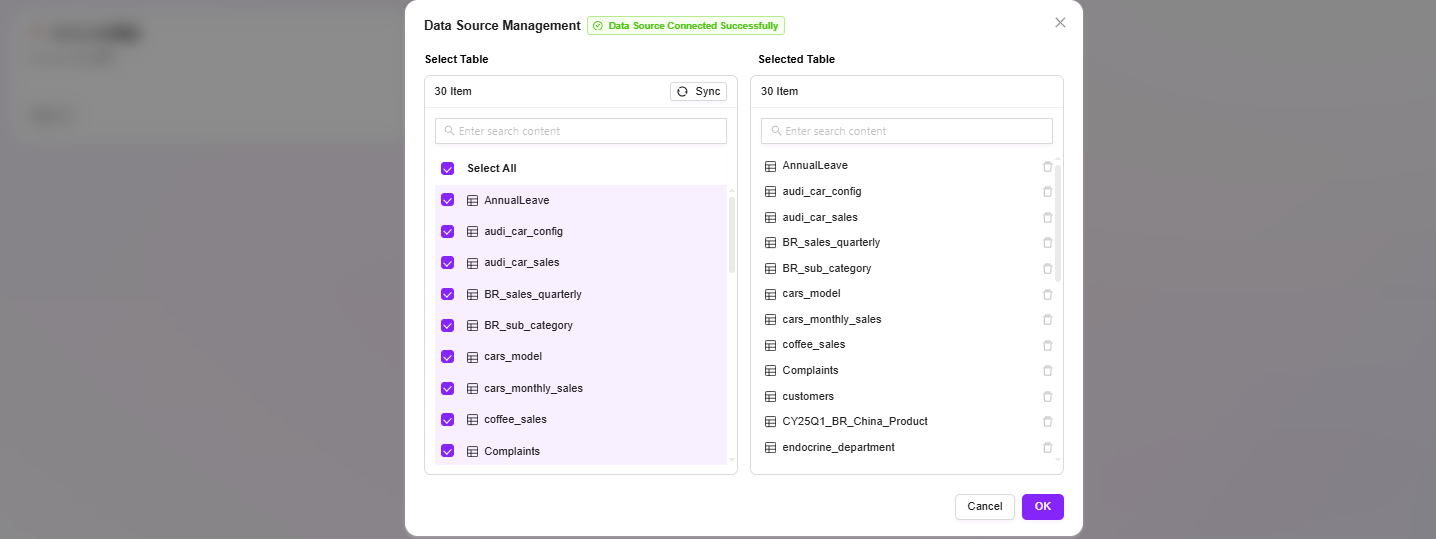

- After adding a data source, users can view all of its tables and select only the tables they need;

- By default, the system synchronizes only metadata (such as table structures and field information), not the data records themselves, which helps protect data security;

- Provides a "Test Connection" feature to verify that the data source is reachable.

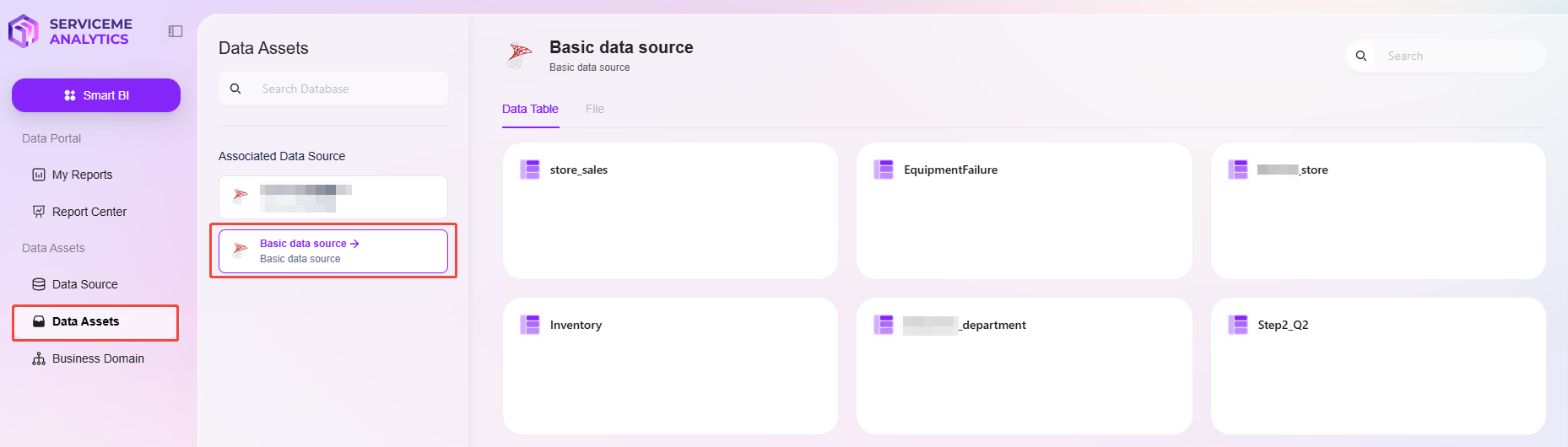
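The connection check and metadata-only synchronization described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the platform's implementation: Python's built-in `sqlite3` module stands in for a source such as MySQL, and the function names `check_connection` and `sync_metadata` are hypothetical. The point is that table and field definitions are read from the database catalog while no data rows are fetched.

```python
import sqlite3


def check_connection(conn: sqlite3.Connection) -> bool:
    """Hypothetical 'Test Connection' check: run a trivial query and report success."""
    try:
        conn.execute("SELECT 1").fetchone()
        return True
    except sqlite3.Error:
        return False


def sync_metadata(conn: sqlite3.Connection, selected_tables: set[str]) -> dict[str, list[dict]]:
    """Collect field metadata for the selected tables only; no data rows are read."""
    tables = [row[0] for row in conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name")]
    metadata = {}
    for table in tables:
        if table not in selected_tables:
            continue  # unchecked tables are skipped entirely
        # PRAGMA table_info rows: (cid, name, type, notnull, dflt_value, pk)
        columns = conn.execute(f'PRAGMA table_info("{table}")').fetchall()
        metadata[table] = [
            {"field": col[1], "type": col[2], "primary_key": col[5] > 0}
            for col in columns
        ]
    return metadata


# Usage: an in-memory database stands in for a real data source.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, region TEXT, amount REAL)")
conn.execute("INSERT INTO orders VALUES (1, 'East', 10.0)")
print(check_connection(conn))
print(sync_metadata(conn, {"orders"}))
```

Only the checked table (`orders`) appears in the result, and only its structure is returned; the inserted row is never read.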

Data Assets

Managing Data Assets

After connecting data sources, users can view and manage synchronized tables in the "Data Assets" module.

- Displays only the tables selected in the data source;

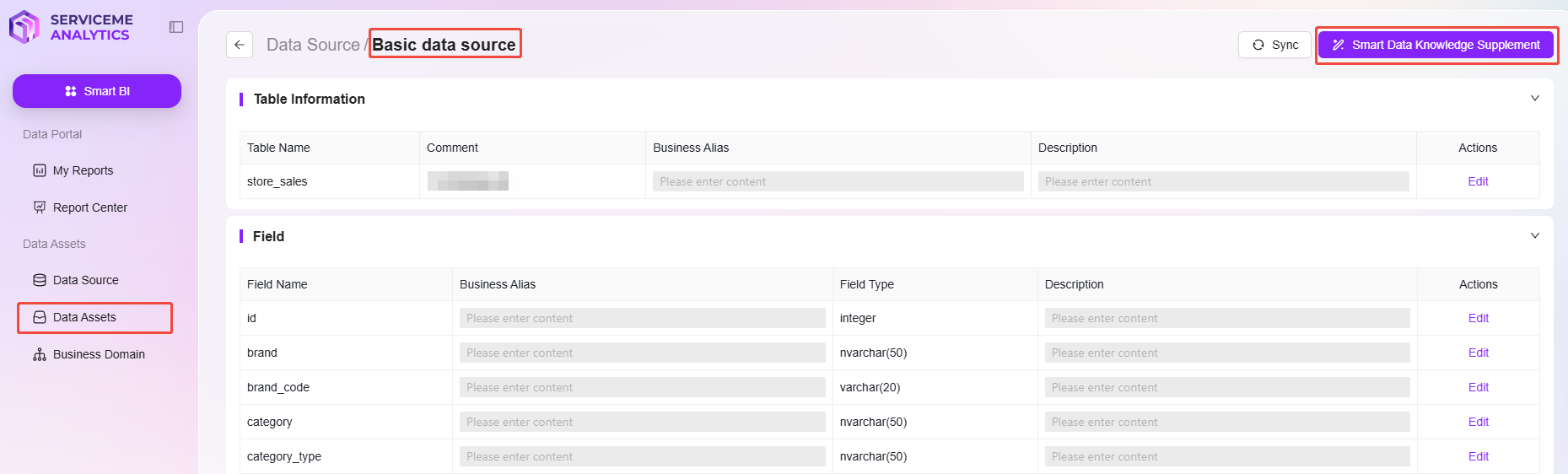

- Supports viewing each table's name, business description, field names, and field descriptions;

- Displays the relationships (lineage) between tables, including related tables, related fields, and relationship types (e.g., primary key/foreign key);

- Supports one-click "Smart Data Knowledge Supplement," in which the system uses AI and the table schema to automatically generate business aliases and descriptions for tables and fields;

- Supports the "Data Table Sync" feature to reload table structures after they change in the source.

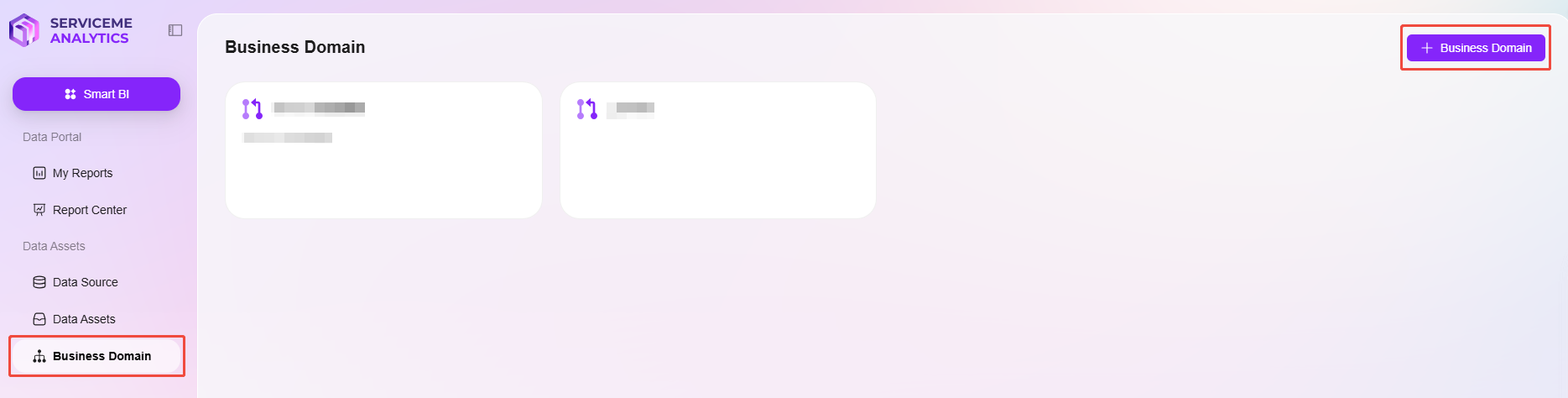
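One common source of the primary key/foreign key relationships listed above is the foreign keys declared in the database schema. The sketch below reads them with `sqlite3`, again only as a stand-in for a real source; the function name `declared_relationships` is hypothetical, and the platform may also derive relationships in ways this guide does not describe.

```python
import sqlite3


def declared_relationships(conn: sqlite3.Connection) -> list[tuple[str, str, str, str]]:
    """Return (from_table, from_field, to_table, to_field) for every declared foreign key."""
    tables = [row[0] for row in conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name")]
    relationships = []
    for table in tables:
        # PRAGMA foreign_key_list rows: (id, seq, table, from, to, on_update, on_delete, match)
        for fk in conn.execute(f'PRAGMA foreign_key_list("{table}")'):
            relationships.append((table, fk[3], fk[2], fk[4]))
    return relationships


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, "
    "customer_id INTEGER REFERENCES customers(id), amount REAL)"
)
for rel in declared_relationships(conn):
    print(rel)
```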

Business Domains

Building Data Models

To help the system understand the business relationships in the data, users build "Business Domains" that define the logical relationships between tables.

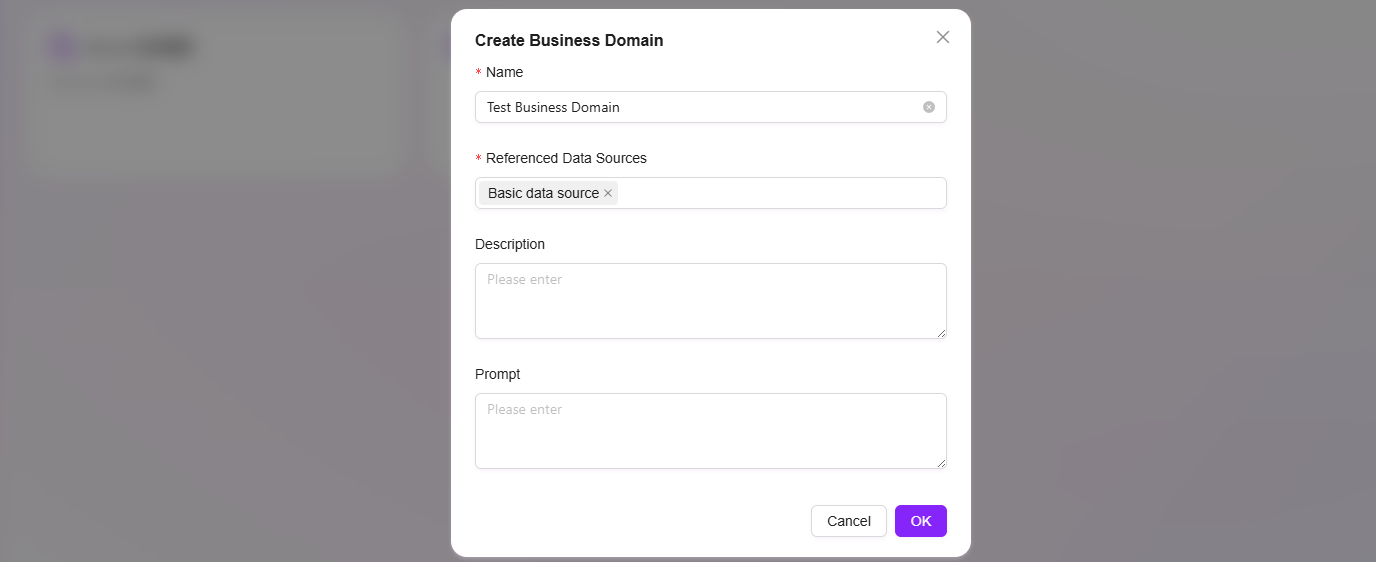

1. Create a Business Domain

- Enter the name of the business domain;

- Select the referenced data source;

- After creation, users can access the business domain management interface.

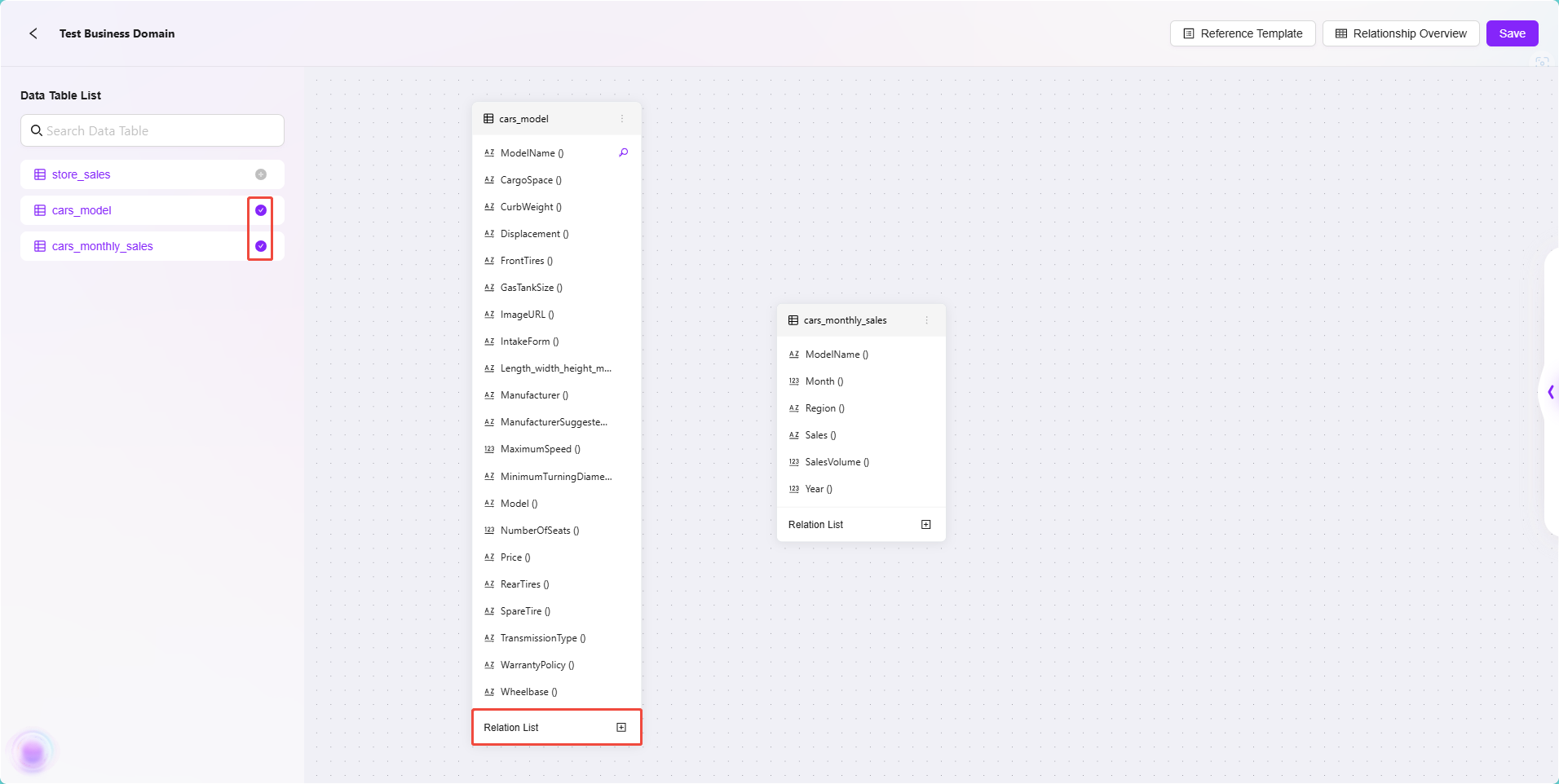

2. Add Data Tables and Model Relationships

- Add the business-related data tables as "Business Domain Nodes"; each node represents one table, and a domain can contain multiple nodes;

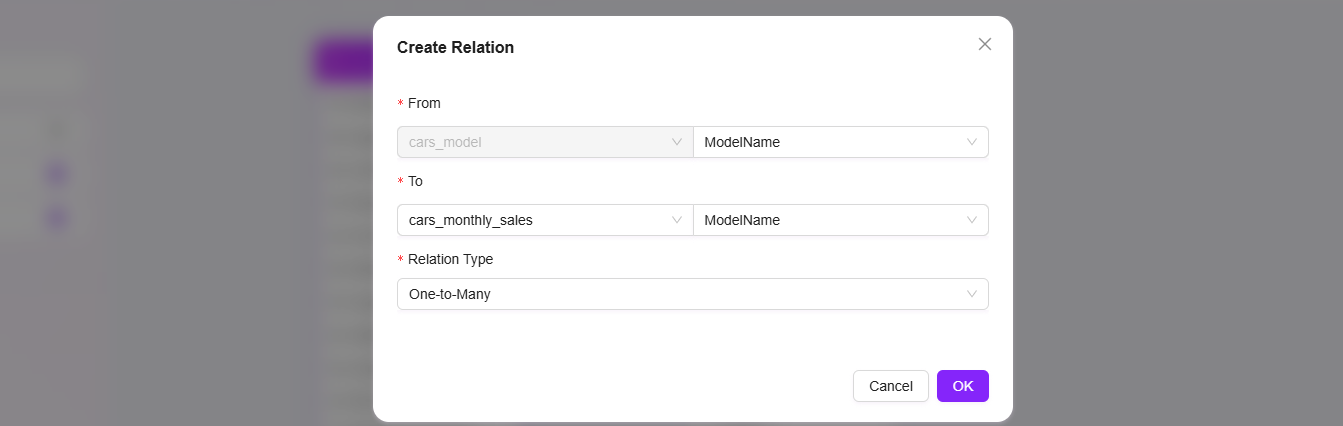

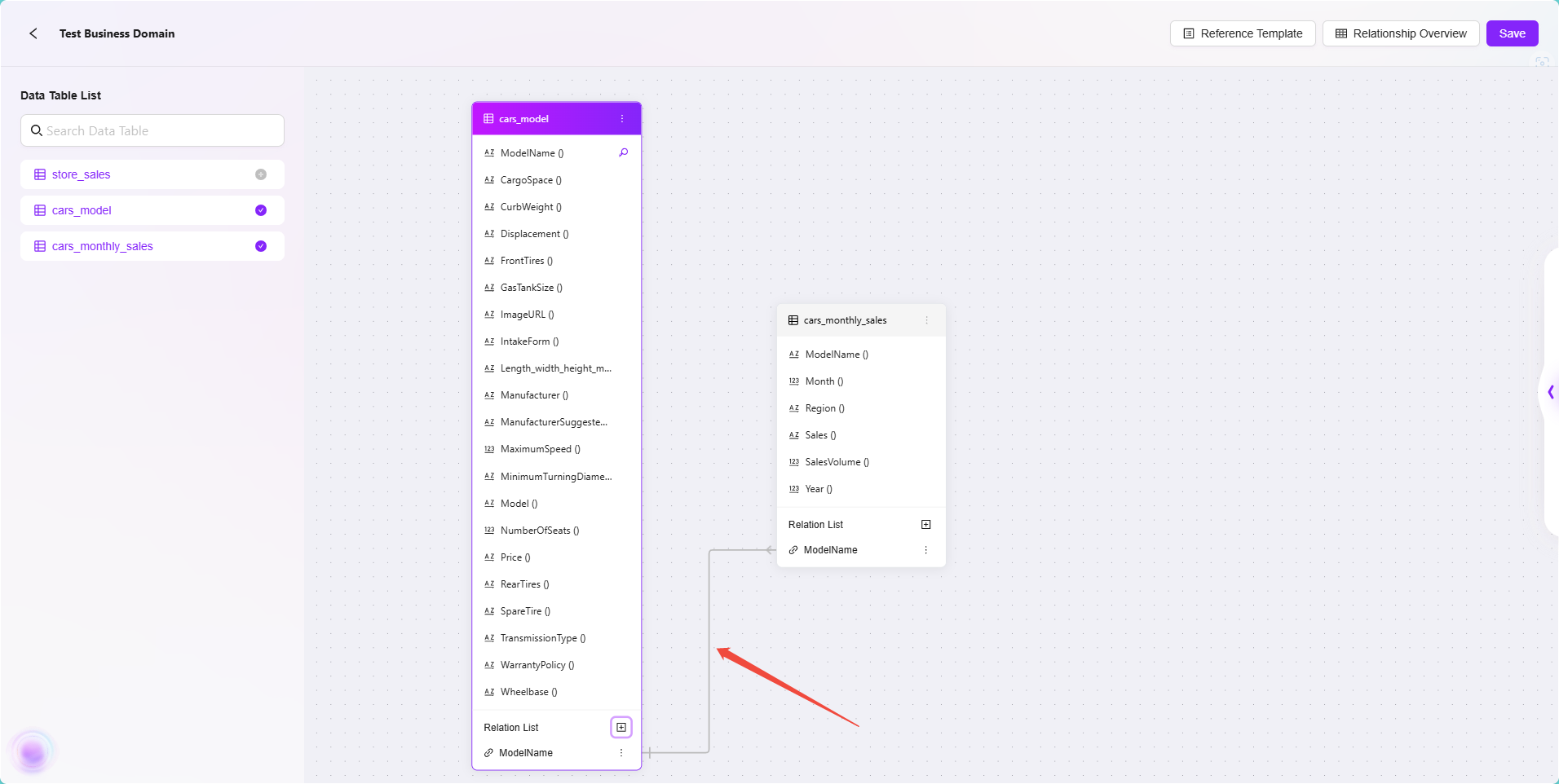

- Click a table node's "Relationship List" to add relationships:

  - Set the From Field (a field in the current table);

  - Set the To Table and Field (the target table and target field);

  - Choose the relationship type: one-to-one, one-to-many, many-to-one, or many-to-many;

  - Submit to bind the relationship between the two tables.

By repeating these steps, users can build a complete relationship network within the business domain, which supports subsequent natural-language queries and chart generation.

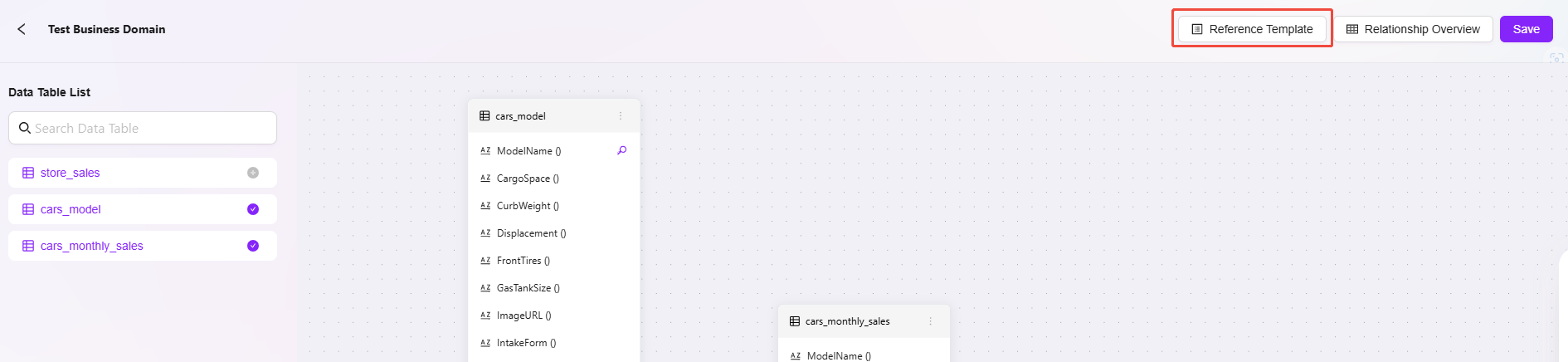

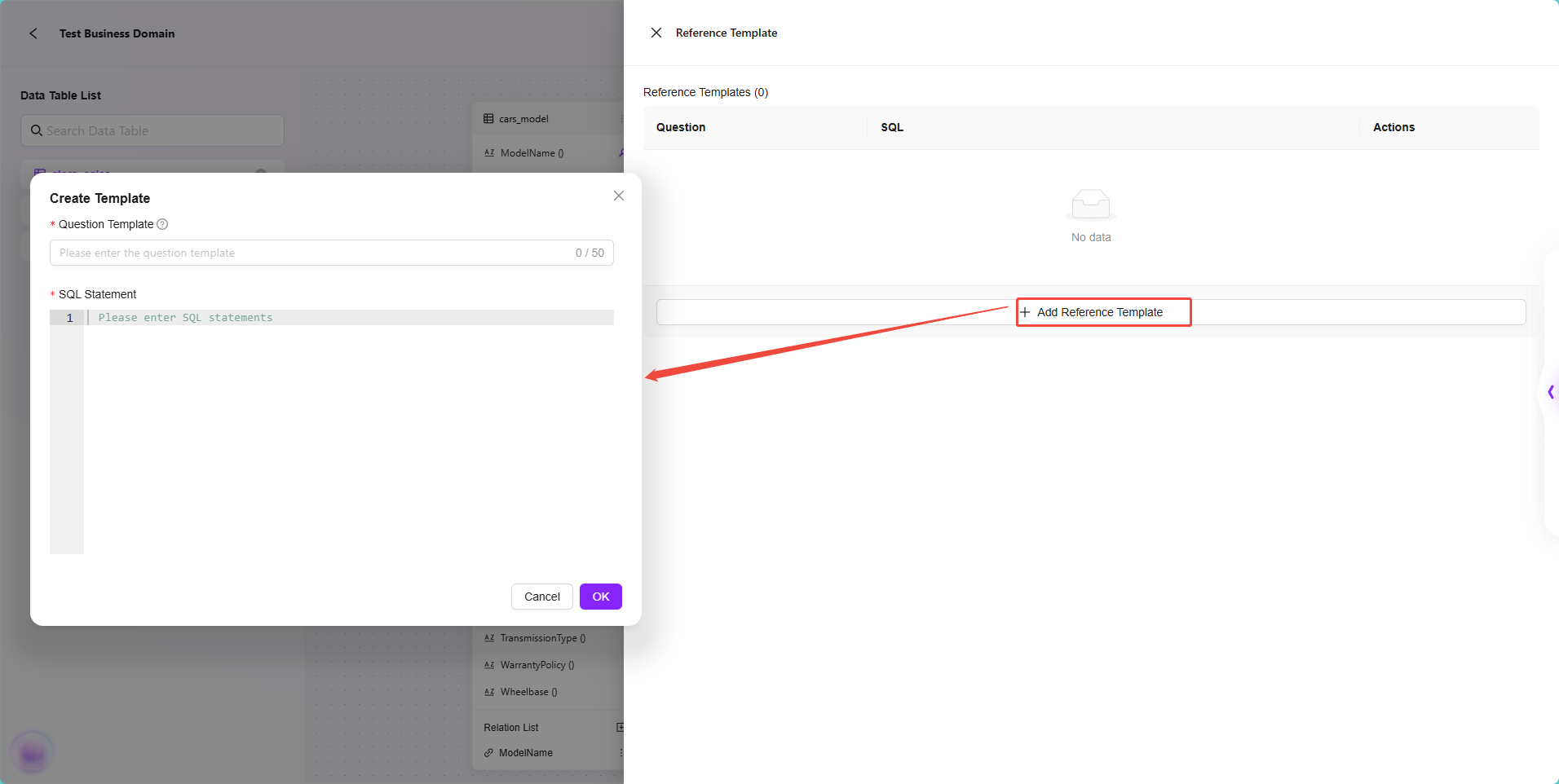
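The node-and-relationship model built in these steps can be pictured as a small data structure. The sketch below is a hypothetical model, not the platform's internal format: `BusinessDomain`, `Relationship`, `RelationshipType`, and `join_sql` are invented names. It enforces that both ends of a relationship are nodes in the domain and shows how a relationship could be rendered as the SQL join a query generator would need.

```python
from dataclasses import dataclass, field
from enum import Enum


class RelationshipType(Enum):
    ONE_TO_ONE = "one-to-one"
    ONE_TO_MANY = "one-to-many"
    MANY_TO_ONE = "many-to-one"
    MANY_TO_MANY = "many-to-many"


@dataclass(frozen=True)
class Relationship:
    from_table: str   # current table
    from_field: str   # From Field
    to_table: str     # To Table
    to_field: str     # target field
    kind: RelationshipType

    def join_sql(self) -> str:
        """Render a direct join. Many-to-many relationships usually need a bridge
        table in practice, which this sketch does not model."""
        return (f"{self.from_table} JOIN {self.to_table} "
                f"ON {self.from_table}.{self.from_field} = {self.to_table}.{self.to_field}")


@dataclass
class BusinessDomain:
    name: str
    data_source: str
    nodes: set[str] = field(default_factory=set)  # one node per table
    relationships: list[Relationship] = field(default_factory=list)

    def add_node(self, table: str) -> None:
        self.nodes.add(table)

    def add_relationship(self, rel: Relationship) -> None:
        # Both ends must already be nodes in this domain.
        missing = {rel.from_table, rel.to_table} - self.nodes
        if missing:
            raise ValueError(f"tables not in domain: {sorted(missing)}")
        self.relationships.append(rel)


domain = BusinessDomain(name="Sales", data_source="sales_db")
domain.add_node("orders")
domain.add_node("customers")
rel = Relationship("orders", "customer_id", "customers", "id", RelationshipType.MANY_TO_ONE)
domain.add_relationship(rel)
print(rel.join_sql())
```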

3. Reference Template

- Supports creating business relationship templates using SQL statements;

- Templates include natural language questions and corresponding SQL queries;

- Intended to be configured by IT administrators so that business users can use the templates directly, which lowers the learning curve.

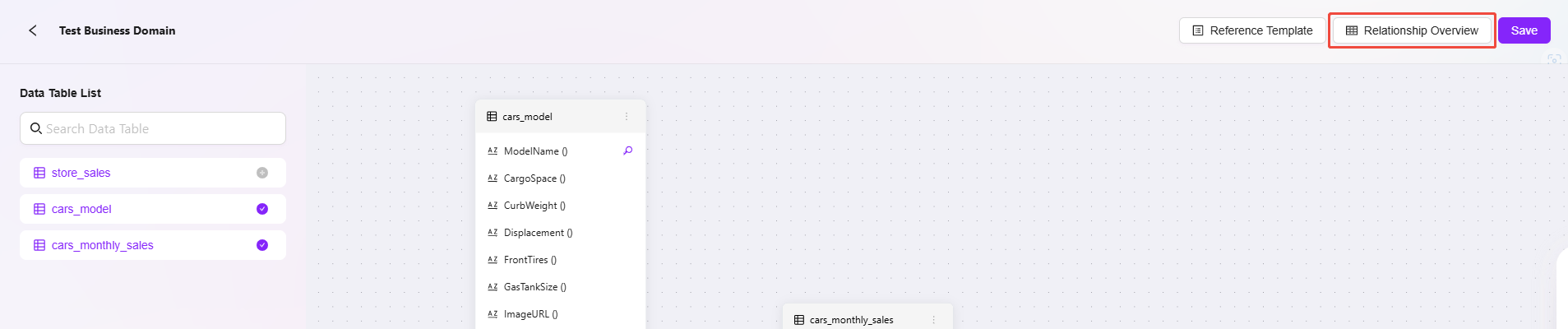

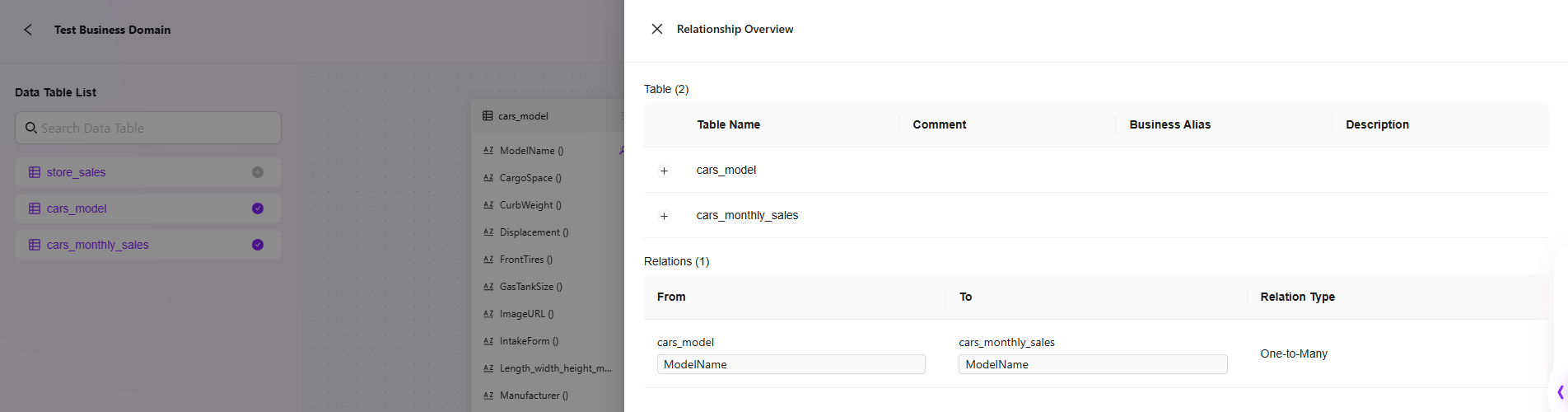
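A reference template pairs a natural-language question with the SQL query that answers it. The sketch below models that pairing and retrieves the closest template by simple word overlap (Jaccard similarity). The matching method is an assumption chosen for illustration; this guide does not say how the platform selects or applies templates, and the names `QueryTemplate` and `best_template` as well as the `orders`/`customers` schema are hypothetical.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class QueryTemplate:
    question: str  # natural-language question, as an administrator would phrase it
    sql: str       # the SQL query that answers it


def _words(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def best_template(question: str, templates: list[QueryTemplate]) -> Optional[QueryTemplate]:
    """Return the template whose question has the highest Jaccard word overlap with `question`."""
    asked = _words(question)
    best, best_score = None, 0.0
    for template in templates:
        words = _words(template.question)
        union = asked | words
        score = len(asked & words) / len(union) if union else 0.0
        if score > best_score:
            best, best_score = template, score
    return best  # None when no template shares any word with the question


templates = [
    QueryTemplate("Total sales by region",
                  "SELECT region, SUM(amount) AS sales FROM orders GROUP BY region"),
    QueryTemplate("Number of new customers per month",
                  "SELECT strftime('%Y-%m', created_at) AS month, COUNT(*) "
                  "FROM customers GROUP BY month"),
]
match = best_template("What are sales by region?", templates)
print(match.sql if match else "no template matched")
```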

4. Relationship Overview

- Displays all tables in the business domain and the relationships between them, giving an overall view for understanding and managing the domain.

Smart BI

Intelligent Analysis and Chart Generation

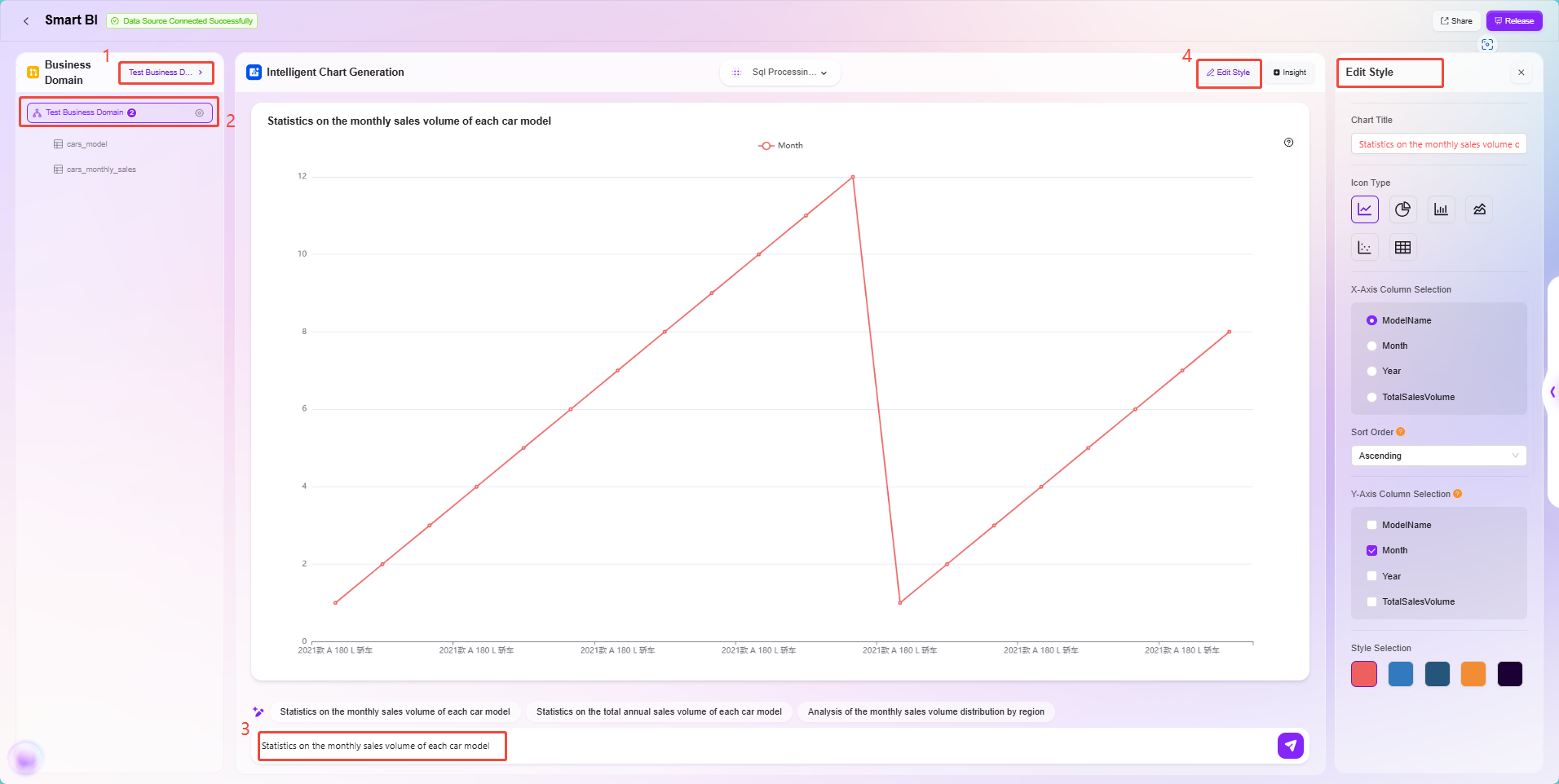

After building the business domain, users can perform visual analysis in the "Smart BI" module.

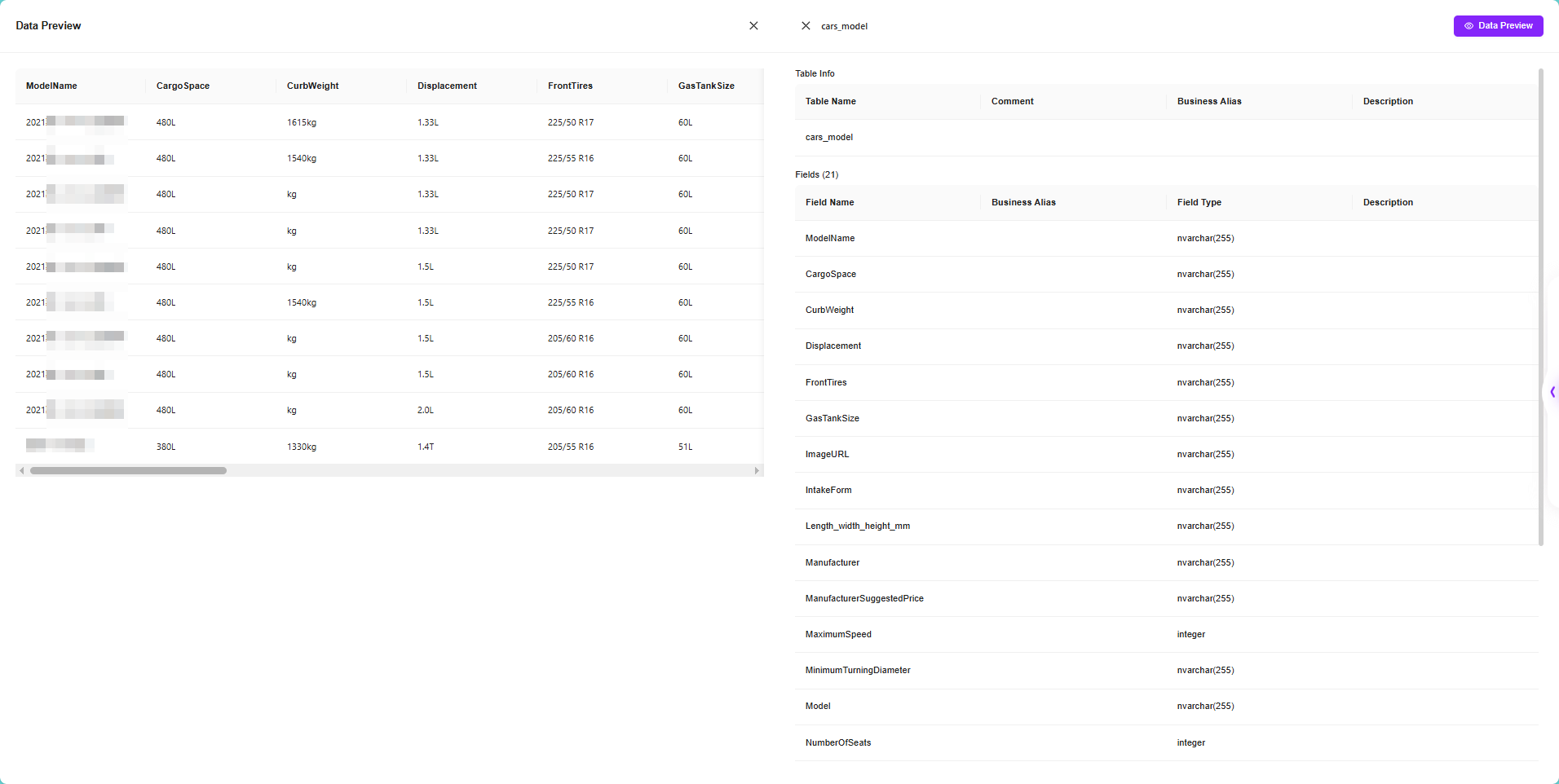

1. Select a Business Domain

- After entering Smart BI, select an existing business domain;

- The left panel displays the data tables within the domain;

- Supports data table preview, including field names and sample data.

2. Intelligent Chart Generation

- Describe the chart you need in the input box using natural language, for example:

  - "Sales trend over the past 7 days"

  - "Order quantity grouped by region"

- The system interprets the request, converts it into a SQL query, and generates the corresponding chart;

- Supports various chart types, including bar charts, line charts, scatter plots, etc.;

- Suggests completions and refinements for user input, which makes the feature well suited to non-technical business users;

- Charts are editable, including the title, chart type, X/Y-axis settings, and color styles.

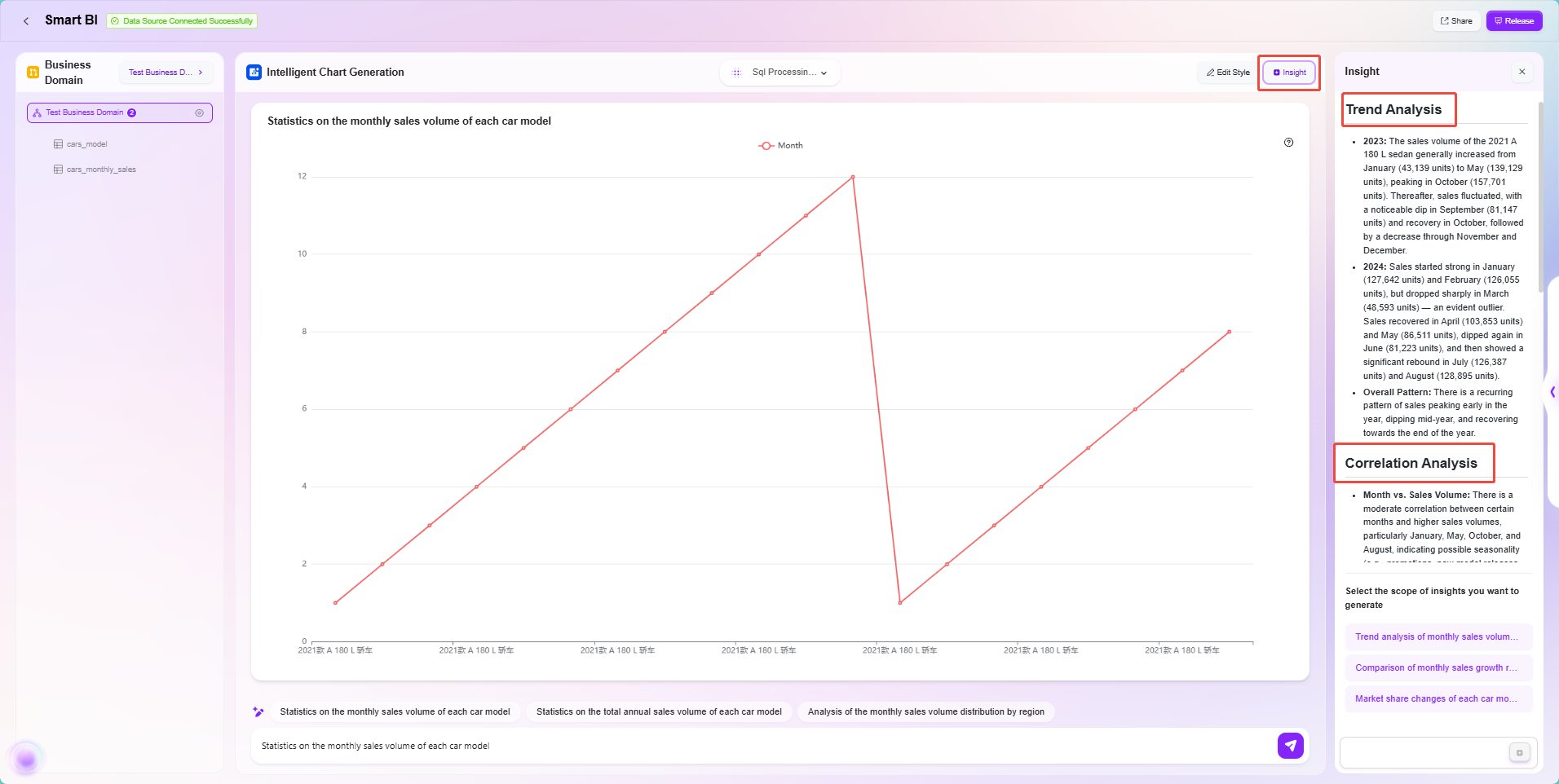
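To make the natural-language-to-SQL step concrete, the sketch below shows SQL that the two example requests could plausibly be translated into, and how a result set becomes the x/y series of a chart. The `orders` table, its columns, and the `chart_series` helper are hypothetical; the SQL the platform actually generates depends on the business domain and is not shown in this guide. `sqlite3` is used only so the example runs on its own.

```python
import sqlite3

# Hypothetical SQL for "Order quantity grouped by region".
ORDER_QUANTITY_BY_REGION = """
    SELECT region, COUNT(*) AS order_quantity
    FROM orders
    GROUP BY region
    ORDER BY region
"""

# Hypothetical SQL for "Sales trend over the past 7 days" (today plus the 6 days before it).
SALES_LAST_7_DAYS = """
    SELECT order_date, SUM(amount) AS sales
    FROM orders
    WHERE order_date BETWEEN date(:today, '-6 days') AND :today
    GROUP BY order_date
    ORDER BY order_date
"""


def chart_series(conn, sql, params=None):
    """Run a two-column query and split it into x (category or date) and y (value) lists."""
    rows = conn.execute(sql, params or {}).fetchall()
    return [row[0] for row in rows], [row[1] for row in rows]


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, region TEXT, order_date TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?, ?)",
    [(1, "East", "2024-05-01", 10.0), (2, "West", "2024-05-03", 5.0),
     (3, "East", "2024-05-03", 7.5), (4, "East", "2024-04-20", 100.0)],
)
print(chart_series(conn, ORDER_QUANTITY_BY_REGION))
print(chart_series(conn, SALES_LAST_7_DAYS, {"today": "2024-05-07"}))
```

The first series would feed a bar chart and the second a line chart; the order dated 2024-04-20 falls outside the 7-day window and is excluded from the trend.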

3. Data Insights Analysis

- In the BI interface, click "Insights" on the right;

- The system uses AI to automatically analyze data trends, anomalies, and possible causes;

- Helps users quickly identify key business issues and supports decision-making.

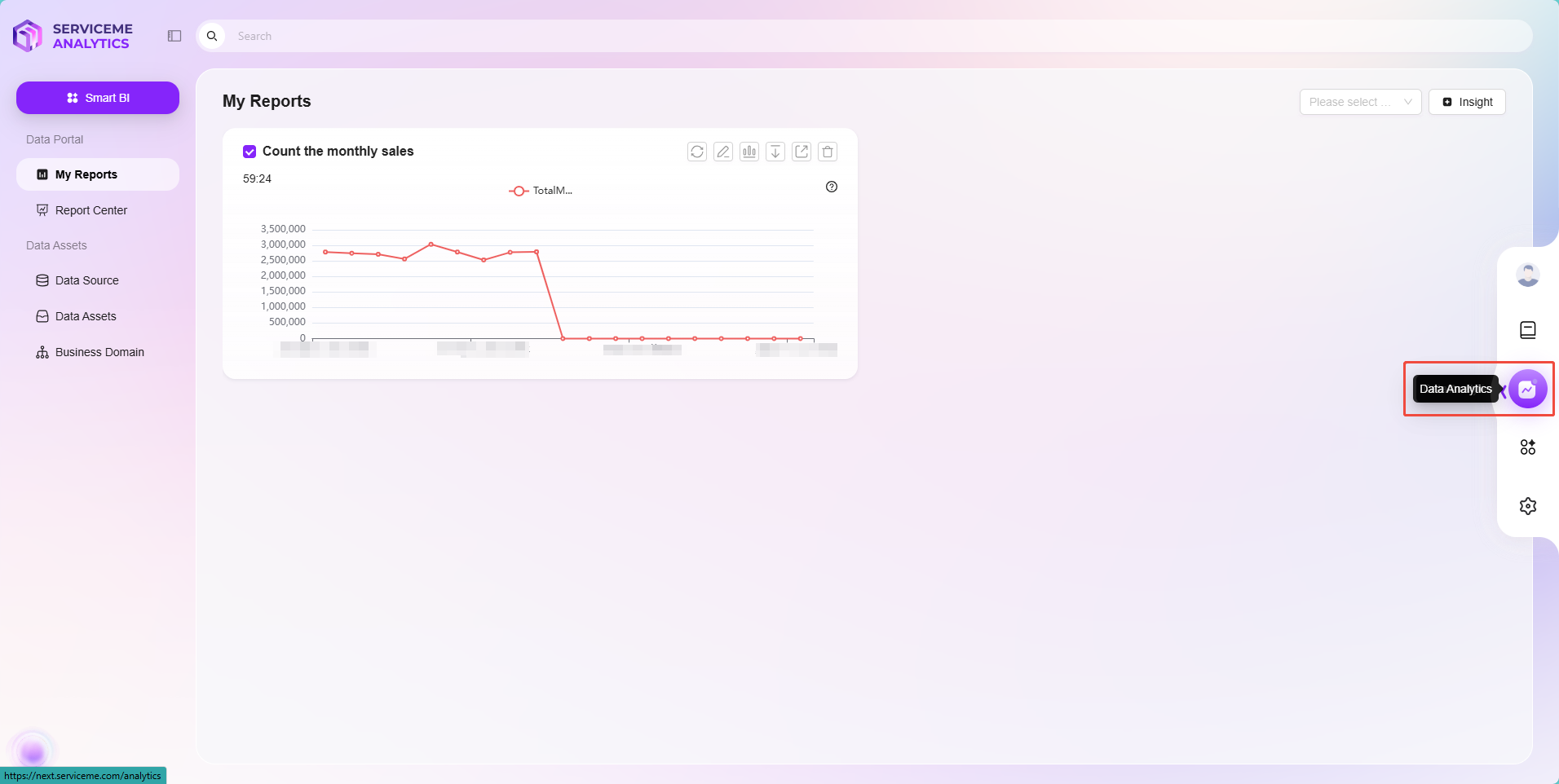
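This guide does not describe how the Insights feature detects anomalies. As a simple point of reference only, the sketch below flags outliers in a daily series with a z-score rule (values more than two standard deviations from the mean). This is a basic statistical stand-in, not the platform's AI method; the function name `flag_anomalies` and the default threshold are assumptions.

```python
from statistics import mean, pstdev


def flag_anomalies(values: list[float], threshold: float = 2.0) -> list[int]:
    """Return indices of values more than `threshold` population standard deviations from the mean."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        return []  # a constant series has no outliers under this rule
    return [i for i, v in enumerate(values) if abs(v - mu) / sigma > threshold]


daily_orders = [10, 11, 9, 10, 12, 10, 50]
print(flag_anomalies(daily_orders))
```

A rule like this only says *where* a value is unusual; explaining possible causes, as the Insights feature aims to do, requires additional context beyond the series itself.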

My Reports & Report Center

Report Publishing and Sharing

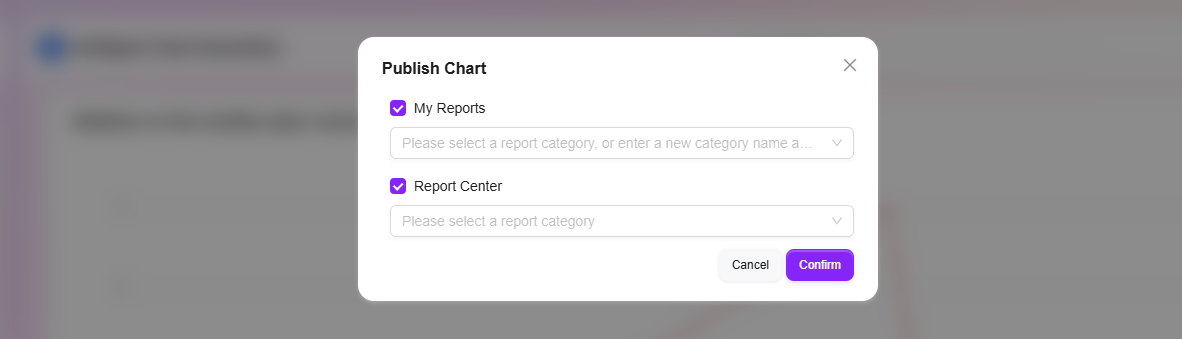

After finalizing a report, users can publish and share it.

1. Report Publishing

- Supports publishing reports to:

  - My Reports (personal, visible only to the publisher);

  - Report Center (shared within the organization);

- Supports setting report categories during publishing.

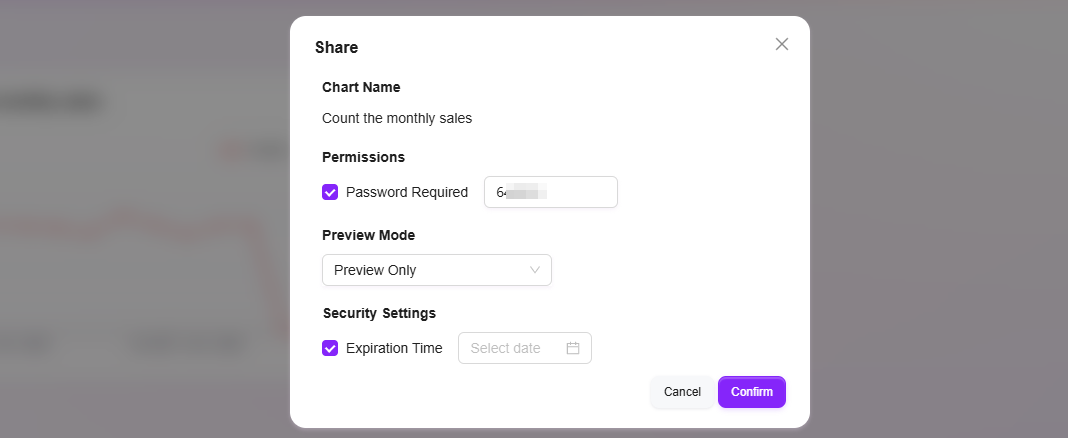

2. Report Sharing

- Supports the following sharing settings:

  - Password protection;

  - Access permissions (preview only);

  - Expiration time;

- Reports can be shared with others quickly via a link.

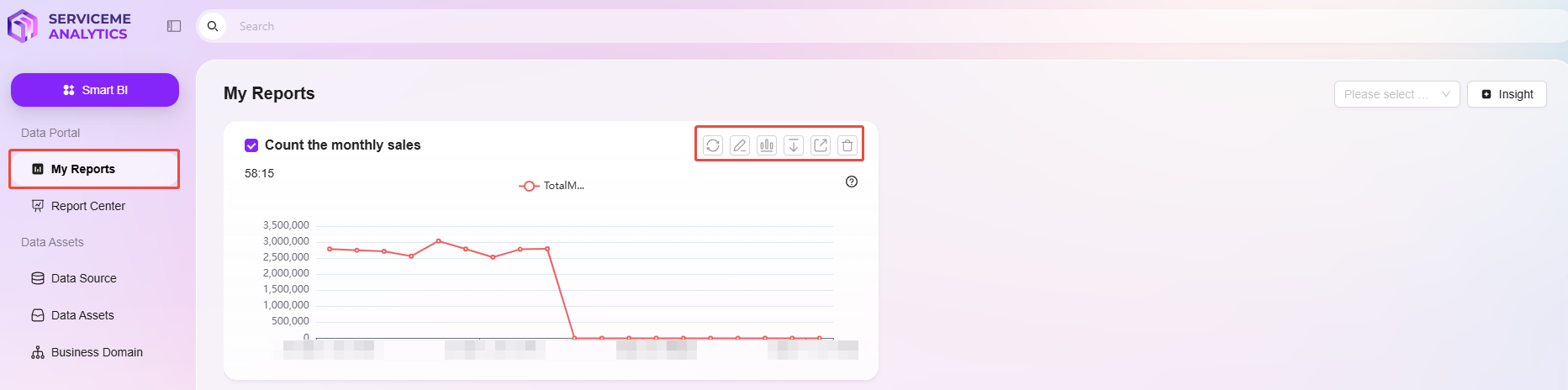

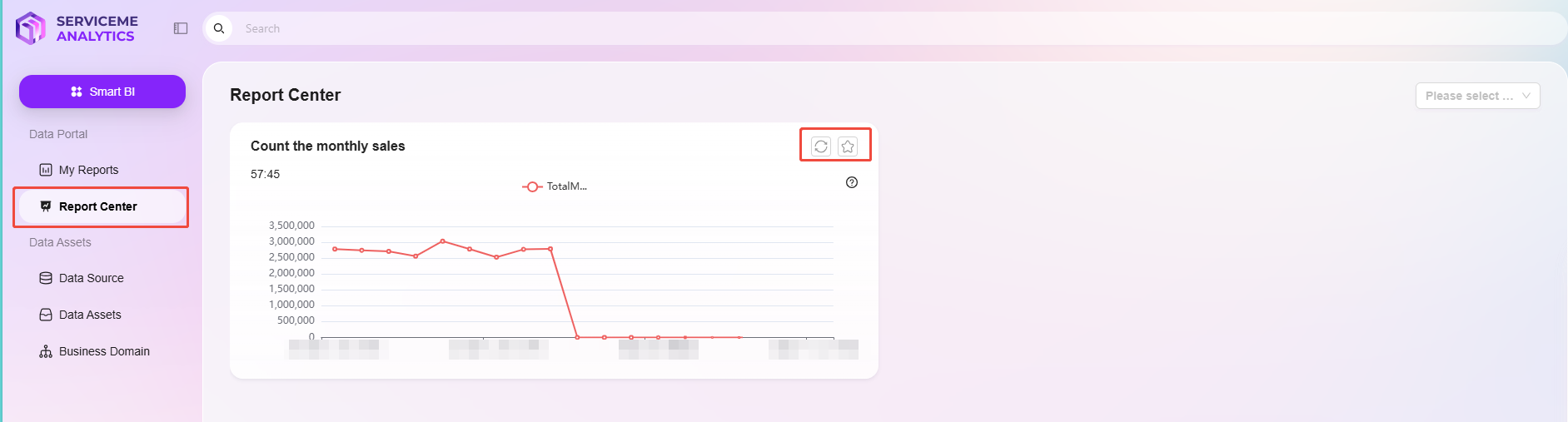
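The three sharing settings can be pictured as checks that run when someone opens a shared link. The sketch below is a hypothetical model, not the platform's implementation: `ShareLink` and its methods are invented names, the password is stored as a salted PBKDF2 hash using Python's `hashlib`, and "preview only" is modeled simply as a flag that denies editing.

```python
import hashlib
import hmac
import os
import secrets
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class ShareLink:
    report_id: str
    expires_at: Optional[datetime] = None  # None means the link never expires
    preview_only: bool = True
    # Random token that would identify the link (hypothetical).
    token: str = field(default_factory=lambda: secrets.token_urlsafe(16))
    _salt: bytes = field(default_factory=lambda: os.urandom(16), init=False, repr=False)
    _password_hash: Optional[bytes] = field(default=None, init=False, repr=False)

    def _hash(self, password: str) -> bytes:
        return hashlib.pbkdf2_hmac("sha256", password.encode(), self._salt, 100_000)

    def set_password(self, password: str) -> None:
        """Enable password protection; only the salted hash is stored."""
        self._password_hash = self._hash(password)

    def can_view(self, password: Optional[str] = None, now: Optional[datetime] = None) -> bool:
        now = now or datetime.now(timezone.utc)
        if self.expires_at is not None and now >= self.expires_at:
            return False  # link has expired
        if self._password_hash is None:
            return True   # no password set
        if password is None:
            return False
        # Constant-time comparison of the stored and supplied hashes.
        return hmac.compare_digest(self._hash(password), self._password_hash)

    def can_edit(self) -> bool:
        return not self.preview_only


link = ShareLink("report-42", expires_at=datetime(2030, 1, 1, tzinfo=timezone.utc))
link.set_password("s3cret")
now = datetime(2025, 6, 1, tzinfo=timezone.utc)
print(link.can_view("s3cret", now), link.can_view("wrong", now), link.can_edit())
```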

3. Report Management

- In "My Reports," users can edit, rename, share, download, or delete published reports;

- The "Report Center" provides an organization-wide summary of reports, with browsing by category and favorites.

Summary

Following the path "Data Sources → Data Assets → Business Domains → Smart BI → Reports," the Analytics module provides users with a complete workflow for data analysis and visualization. Users start by connecting data sources of various types, selectively syncing metadata, and reviewing table structures and field information in the Data Assets module. With Business Domains, users model business logic and establish semantic associations between tables.

In the Smart BI module, natural-language queries, intelligent chart generation, and AI insights analysis lower the barrier to analysis, so that even non-technical business users can get started quickly. Finally, users can publish, manage, and share the generated charts as reports, supporting knowledge sharing within the organization as well as sharing with external recipients.

Throughout the process, the system follows a "low-code, high intelligence, strong integration" design philosophy, balancing ease of use for business users with extensibility for professional users. It forms a closed loop from data integration and modeling through analysis and insights to decision support, helping enterprises become data-driven organizations.